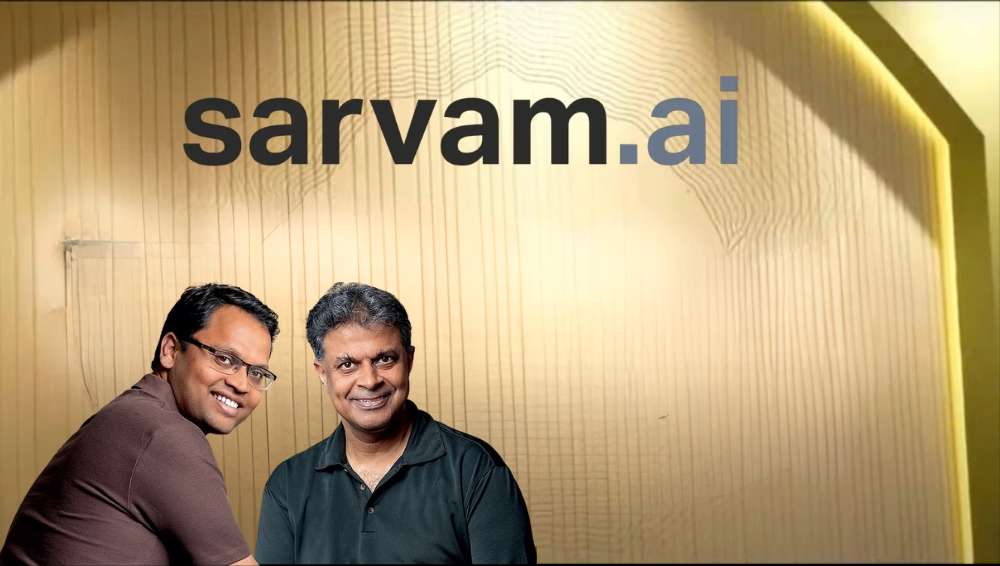

Sarvam AI unveils two foundational LLMs

19 Feb 2026, 07:00 PM

Sarvam AI was the first company selected under the central government's flagship IndiaAI Mission to build the country’s sovereign foundational AI model.

Team Head&Tale

Sarvam AI, which is backed by Peak XV Partners and Lightspeed, has unveiled two new foundational large language models (LLMs), marking a major push toward sovereign, homegrown artificial intelligence infrastructure.

The two models, Sarvam-105B and Sarvam-30B, were launched at the India AI Impact Summit in New Delhi.

Sarvam-105B, the larger model, has 105 billion parameters and a 128,000-token context window, giving it the capacity for complex reasoning, long-document understanding and enterprise workflows.

Pratyush Kumar, co-founder of Sarvam AI, said the model outperformed the 671-billion-parameter DeepSeek R1, released last year, as well as Google’s Gemini 2.5 Flash on a technical benchmark for Indian languages.

Sarvam-30B is a 30-billion-parameter large language model built to handle multilingual conversations, translation, summarisation and reasoning tasks.

Notably, Sarvam AI was the first company selected under the central government's flagship IndiaAI Mission to build the country’s sovereign foundational AI model.